Commissions Impossible: How Can Future Military Commissions Avoid the Failures of Guantanamo?

Aaron Shepard endeavors to examine the roots of the failures of the Guantanamo military commissions and suggest potential solutions to remedy them. His paper begins with an introduction to the concept of military commissions, including a brief overview of their historic utilization and import. It then provides a detailed background on Guantanamo Bay, covers the…

Tag: Counterterrorism Law

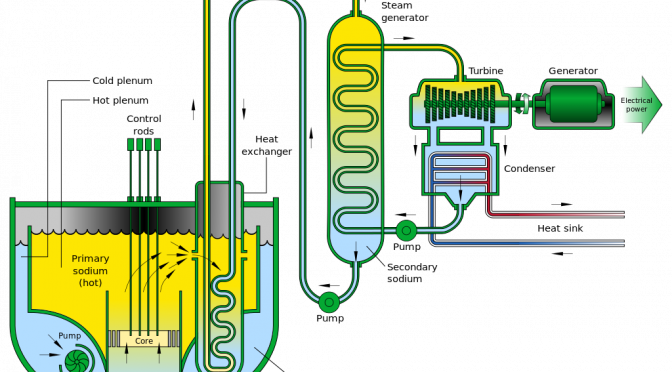

Advanced Reactors and Nuclear Terrorism: Rethinking the International Framework

While nuclear energy today provides about 10% of global electricity generation in reliable, carbon-free form, the immense destruction tied to its origins casts a long shadow. This tension between terrible and peaceful power underlies the expansive non-proliferation regime of international law, a framework meant to keep nuclear technology from being diverted from this peaceful use…

Managing the Terrorism Threat with Drones

The contours of America’s counterterrorism strategy against al Qaeda and the Islamic State have remained remarkably consistent over the past two decades, broadly focused on degrading al Qaeda’s and the Islamic State’s external attack capabilities and global networks, disrupting their operations through military operations or enhanced law enforcement and border security, and denying them sanctuaries.…